4K & 8K Gaming and Energy Efficiency: How many pixels is too many?

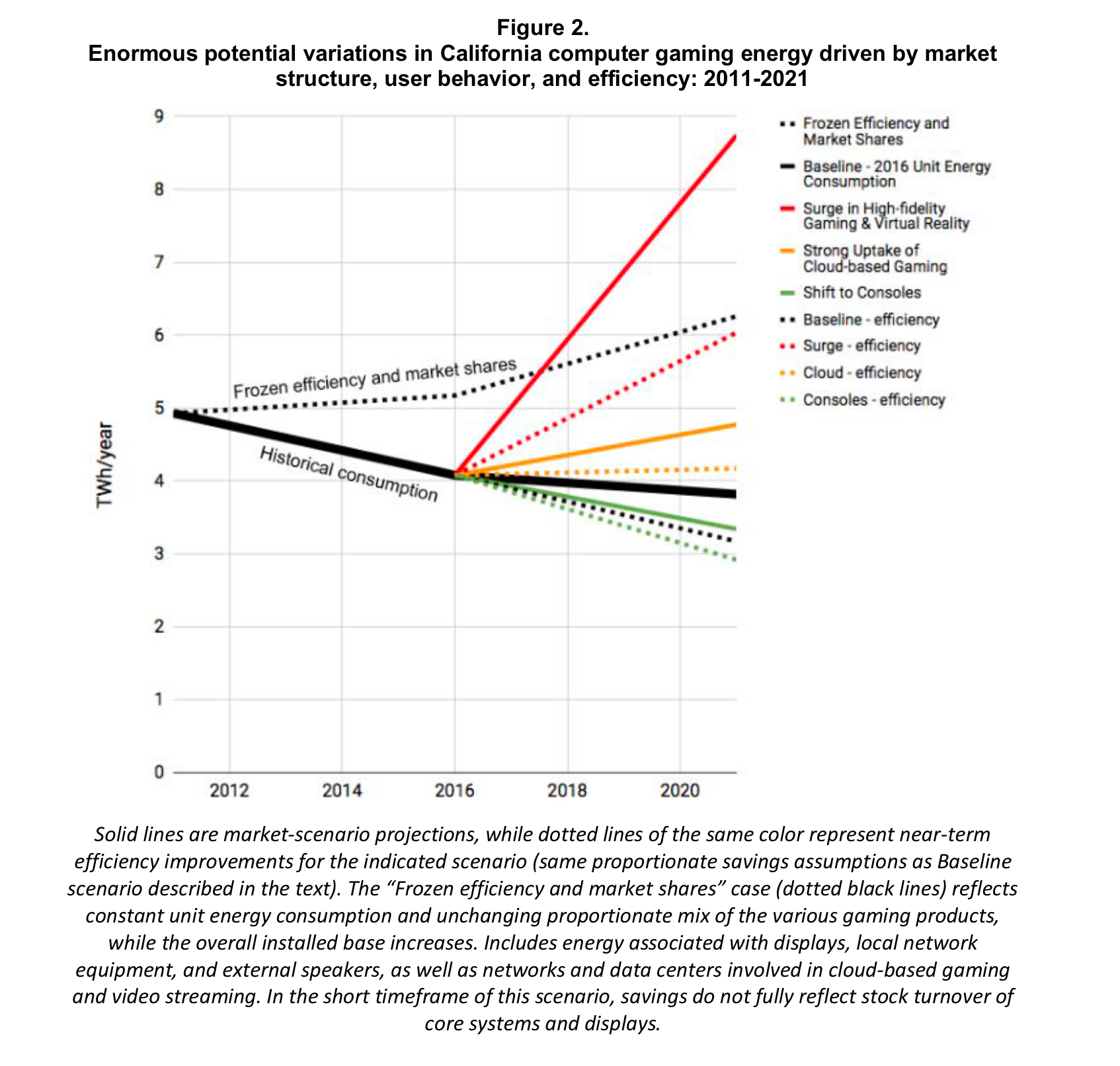

I’ve been thinking a lot about what sorts of reasonable limits or regulations we could place on gaming hardware to reduce energy consumption and emissions. I haven’t written extensively on it yet (though there are some sections that touch on it in the book) but I am increasingly of the view that this is going to have to centre primarily on hardware, as it plays an outsized role in setting the bulk of the energy (and hence emissions) profile of the industry for the foreseeable future. The alternative would be, say, trying to produce software controls that limit energy consumption, which seems like a recipe for a lot of effort and very little reward. I’m also guided here by the work of Mills et al. (2018), who have done a lot of the groundwork establishing some baselines and dynamics (though they see more hope in software-based savings). Here are Mills et al.’s (2018) projections for possible energy consumption from gaming in the state of California up to last year. I would love to see an update on how this ended up tracking.

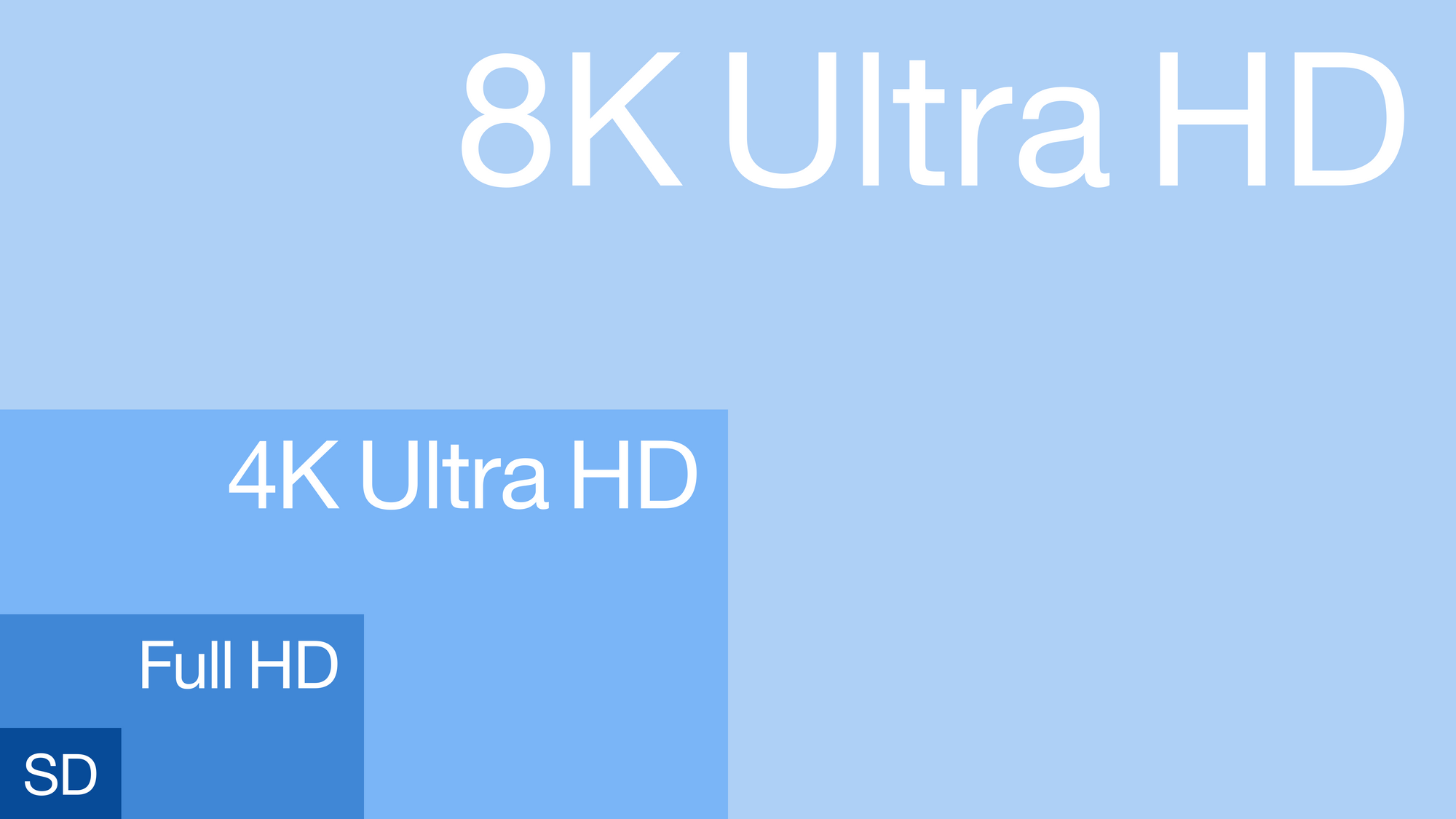

One of the findings of their study is that “high-resolution 4k displays… significantly elevate gaming system power” (Mills et al. 2018: 4). This is not particularly shocking, given that doubling the screen resolution in each dimension quadruples the number of pixels, substantially increasing the work required of the GPU. Likewise, a further doubling to 8K (as the latest consoles are capable of outputting) would similarly tax systems and demand greater energy consumption. This illustration from the Wikipedia page for 8K shows just how much of a jump it is:

For the purposes of bending the energy consumption curves downward, I am increasingly convinced it is worth pushing back on some of the (early as it may be) interest in 8K displays, as I think we are approaching a very real point of diminishing returns. If we can definitively say “8K is not worth it for the vast majority of use cases”, that could allow us to draw a bit of a line in the sand at 4K screens and contribute to reducing the growth of energy consumption.
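To put rough numbers on the jump in pixel count mentioned above, here is a small sketch. It assumes the standard 16:9 consumer dimensions for each resolution, and the `pixel_count` helper is just an illustrative name. Note that it only counts pixels: actual GPU power draw will not scale in exact proportion to pixel count.

```python
# Pixel counts for common 16:9 resolutions (standard consumer dimensions,
# assumed here for illustration).
RESOLUTIONS = {
    "1080p (HD)": (1920, 1080),
    "4K (UHD)": (3840, 2160),
    "8K (UHD)": (7680, 4320),
}


def pixel_count(width, height):
    """Total number of pixels in a frame of the given dimensions."""
    return width * height


base = pixel_count(*RESOLUTIONS["1080p (HD)"])
for name, (w, h) in RESOLUTIONS.items():
    total = pixel_count(w, h)
    # Each doubling of width and height multiplies the pixel count by four.
    print(f"{name}: {total:,} pixels ({total / base:.0f}x 1080p)")
```

Each step up doubles both dimensions, so 4K carries four times the pixels of 1080p and 8K carries sixteen times as many.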

The success of Apple’s so-called ‘retina screen’, first popularised way back in 2010, provided the first guide to my thinking here, and it points towards something about the physical limitations of the human eye itself. Here’s how Steve Jobs explained it back when it was first announced:

It turns out there’s a magic number right around 300 pixels per inch, that when you hold something around to 10 to 12 inches away from your eyes, is the limit of the human retina to differentiate the pixels.

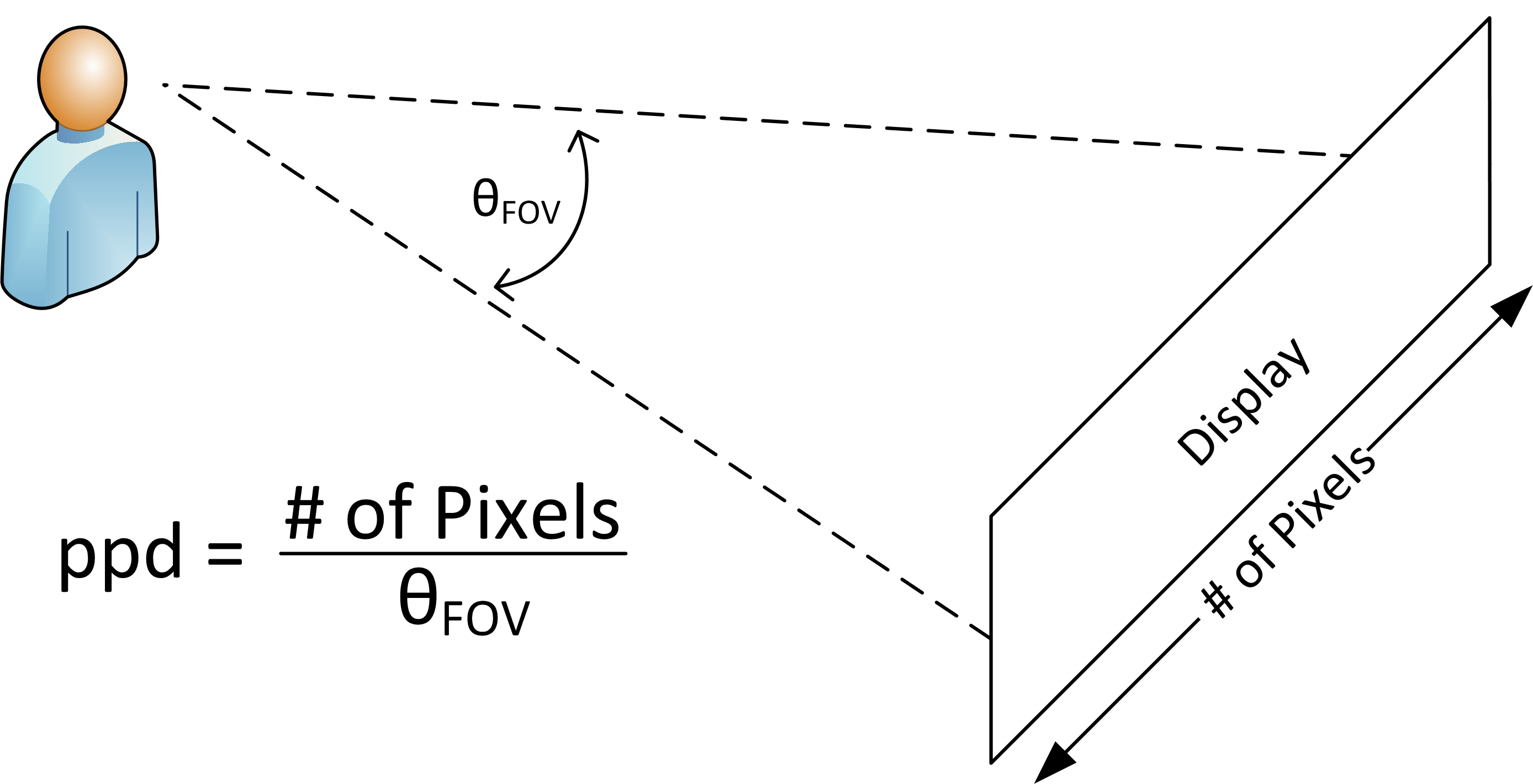

What he’s describing here is the average viewer with 20/20 vision and the physical limit of their ability to resolve individual pixels below a certain size, relative to the viewing distance. Screens held in the hand actually have a harder job achieving this than those viewed from further away, like in living rooms, because the closer the eye is to the screen, the larger each pixel appears. There is a relationship between pixel density (a combination of resolution and screen size) and the distance of the viewer from the screen. This relationship can be described as pixels-per-degree (PPD). This diagram (via Texas Instruments) helps show what is being measured here:

In other words, for every 1 degree of a viewer’s vision, a certain number of ‘pixels’ will be present within that degree. The higher the count of pixels per degree, the less likely it is that the viewer can see ‘individual’ pixels. Wikipedia also explains it for us, noting that:

the PPD parameter is not an intrinsic parameter of the display itself, unlike absolute pixel resolution (e.g. 1920×1080 pixels) or relative pixel density (e.g. 401 PPI), but is dependent on the distance between the display and the eye of the person (or lens of the device) viewing the display; moving the eye closer to the display reduces the PPD, and moving away from it increases the PPD in proportion to the distance.
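The relationship can be sketched in a few lines of Python. This is a minimal version under stated assumptions: a flat 16:9 screen viewed straight on, with PPD measured at the centre of the screen as the reciprocal of the angle that one pixel subtends at the eye. The function names are my own, and other calculators may use a slightly different method (for example, averaging across the whole field of view).

```python
import math


def screen_width_in(diagonal_in, aspect=(16, 9)):
    """Physical width in inches of a screen with the given diagonal."""
    aw, ah = aspect
    return diagonal_in * aw / math.hypot(aw, ah)


def pixels_per_degree(h_pixels, diagonal_in, distance_in, aspect=(16, 9)):
    """Pixels per degree at the screen centre (assumed definition).

    h_pixels    -- horizontal resolution, e.g. 3840 for 4K
    diagonal_in -- screen diagonal in inches
    distance_in -- eye-to-screen distance in inches
    """
    pitch_in = screen_width_in(diagonal_in, aspect) / h_pixels  # width of one pixel
    degrees_per_pixel = math.degrees(2 * math.atan(pitch_in / (2 * distance_in)))
    return 1 / degrees_per_pixel


# Example: a 65-inch 4K screen viewed from 8 feet (96 inches).
print(round(pixels_per_degree(3840, 65, 96), 1))
```

Because a single pixel subtends such a small angle, doubling the viewing distance very nearly doubles the PPD, which matches the proportionality described in the quote.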

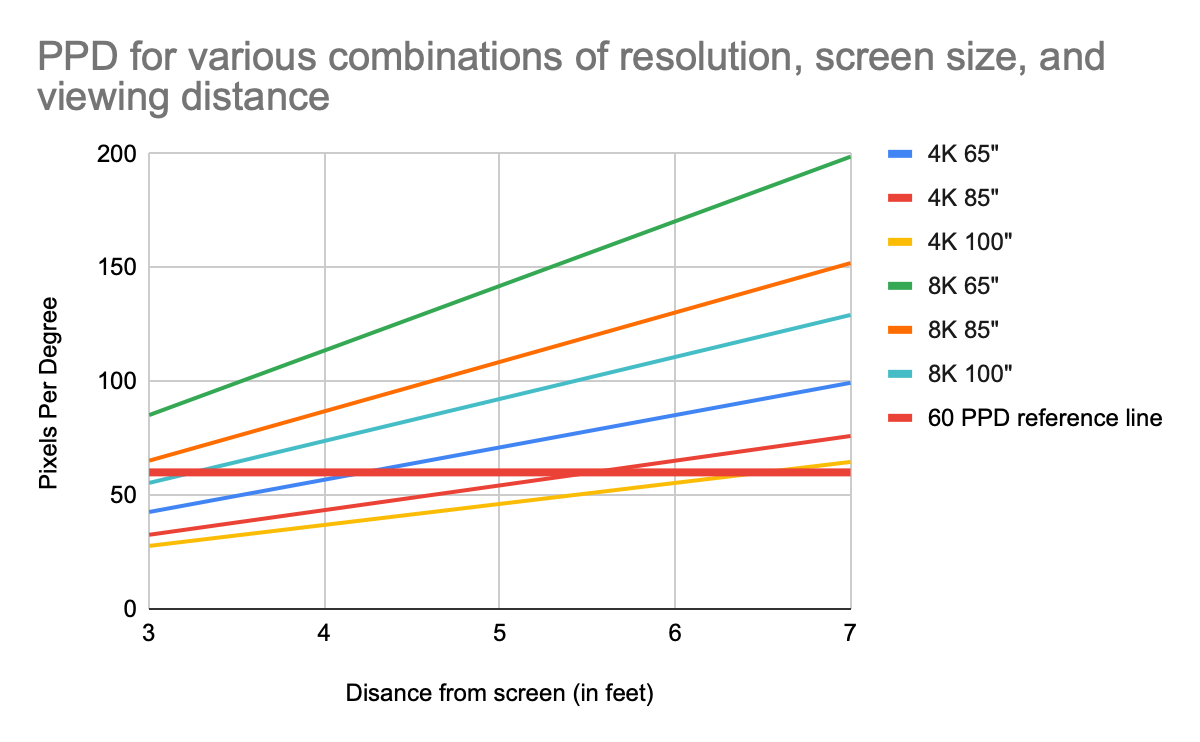

I found this useful little website that provides a PPD calculator – plug in values for the resolution (pixel count), the screen size, and the distance you’re viewing it from and it tells you a PPD value. You can also use it in reverse, putting in any 3 of the variables and having it calculate the fourth. Playing around with this shows us the dynamics of different combinations of resolution, screen size, and viewing distance – I did this with the goal of seeing if we can achieve the same effect as Jobs’ ‘magic number’ for retina screen iPhones.

I’ve tabled some values for 4K and 8K screens, assuming three different screen sizes (65”, 85” and 100”) and a horizontal resolution of either 3840 (4K) or 7680 (8K), and I’ve provided PPD values for viewing distances from as low as 3 feet from the screen up to 7 feet.

The thicker horizontal red bar is the magic figure of 60 PPD – the requirement to be a ‘retina screen’ or approximately the limit above which the average human viewer can no longer distinguish individual pixels.

(Interactive version of the chart here)

What the chart shows is that unless you sit really, really close to your 4K screen or have a humongously big 100” screen that you still sit relatively close to (6.5ft away or less), then you are almost certainly already above the 60 PPD threshold when viewing your television in an ordinary lounge room setting. For an 8K screen there are even fewer scenarios where you might plausibly be able to identify individual pixels – you have to sit a ridiculous 3 feet away from a 100” screen. For the vast majority of users and use-cases (basically the entire part of the graph above the horizontal red line) viewing an 8K TV from any reasonable distance will produce very little appreciable difference to a 4K TV. Except in these extremely unlikely cases of massive screens, or sitting right up in front of the screen, there is little or no benefit from an 8K resolution.
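For anyone who wants to reproduce something like these figures, here is a rough sketch. It assumes 16:9 screens and the centre-of-screen definition of PPD (one pixel’s subtended angle, inverted); the calculator I used may compute things slightly differently, and the function names and the one-foot distance steps are my own choices for illustration.

```python
import math

THRESHOLD_PPD = 60  # the 'retina' threshold used in this post


def pixel_pitch_in(h_pixels, diagonal_in):
    """Width of one pixel in inches on a 16:9 screen (assumed aspect ratio)."""
    width_in = diagonal_in * 16 / math.hypot(16, 9)
    return width_in / h_pixels


def ppd(h_pixels, diagonal_in, distance_ft):
    """Pixels per degree at the screen centre for a viewer distance_ft away."""
    pitch = pixel_pitch_in(h_pixels, diagonal_in)
    return 1 / math.degrees(2 * math.atan(pitch / (2 * distance_ft * 12)))


def min_distance_ft(h_pixels, diagonal_in, target_ppd=THRESHOLD_PPD):
    """Closest viewing distance (feet) at which PPD reaches target_ppd."""
    pitch = pixel_pitch_in(h_pixels, diagonal_in)
    return pitch / (2 * math.tan(math.radians(1 / target_ppd) / 2)) / 12


for label, h_pixels in (("4K", 3840), ("8K", 7680)):
    for diagonal in (65, 85, 100):
        # PPD at 3, 4, 5, 6 and 7 feet from the screen.
        row = "  ".join(f"{ppd(h_pixels, diagonal, d):6.1f}" for d in range(3, 8))
        limit = min_distance_ft(h_pixels, diagonal)
        print(f"{label} {diagonal:>3} in: {row}  | 60 PPD at {limit:.1f} ft")
```

Under these assumptions, the 100” 4K screen crosses 60 PPD at about 6.5 feet, and the 100” 8K screen only drops below 60 PPD somewhere between 3 and 3.5 feet.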

To further emphasise this point, the website I used to produce these calculations helpfully provides reference figures from a variety of screen and picture standards bodies as well. Both the ‘ideal viewing distance’ specified by 20th Century Fox for CinemaScope pictures and the viewing distances recommended by the Society of Motion Picture and Television Engineers (SMPTE) in every case push the viewer further back from the screen and above the 60 PPD threshold. The “Minimum Viewing Distance specified by SMPTE” for even the 65” screen is 5.7 ft – which places the 4K resolution TV comfortably above our red line.

So what does all this mean? It means that for 4K resolution television screens, for any reasonable viewing distance and screen size combination, we have already achieved a ‘pixel structure’ that is basically indistinguishable to the average viewer with 20/20 vision. Whatever our screen size, for any reasonable viewing distance the PPD value is basically always above the ‘magic number’ that Jobs was describing as a ‘retina screen’. (Wikipedia actually notes that “based on Jobs' predicted number of 300 DPI and the expected viewing distance of a phone, the threshold for a Retina display starts at the PPD value of 57 PPD.” I'm using 60 here to be extra cautious.)
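As a sanity check on that 57 PPD figure, here is a quick sketch converting Jobs’ 300 pixels per inch at 10 to 12 inches into pixels per degree. It assumes the same centre-of-view definition of PPD (the reciprocal of the angle one pixel subtends), and `ppd_from_ppi` is a name I have made up for illustration.

```python
import math


def ppd_from_ppi(ppi, distance_in):
    """Pixels per degree for a display of the given PPI at distance_in inches."""
    pitch_in = 1 / ppi  # width of one pixel in inches
    return 1 / math.degrees(2 * math.atan(pitch_in / (2 * distance_in)))


for distance in (10, 11, 12):
    print(f"300 PPI at {distance} in: {ppd_from_ppi(300, distance):.1f} PPD")
```

This gives roughly 52, 58 and 63 PPD at 10, 11 and 12 inches respectively, so a threshold in the high 50s sits in the middle of the range Jobs described, and 60 is a slightly conservative choice.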

Retinal neuroscientist Bryan Jones wrote about the retina display when it was first revealed in 2010, and how the interface between human biology and the size of pixels sets the level of detail we can actually discern. His conclusion was that ‘Apple’s claims stand up to what the human eye can perceive’ and that the rough benchmark of the ‘retina screen’ is a reasonably credible claim. True, there may be other qualitative differences that a higher PPD produces – such as a perception of increased clarity or sharpness – but we should be critical of such claims and demand serious evidence of them, especially when they come from marketers trying to sell new TVs. The jump from HD to 4K makes sense, producing appreciably better images. But I am not convinced that any future move to an 8K resolution standard will provide anything close to the same return on investment. What 8K screens will provide, however, are almost inevitably greater demands on computational systems, on GPUs, and thus on overall energy.

8K gaming on consoles, done in ordinary living room conditions, as the graphs and data above show, does not make sense, and should probably be resisted by both consumers and the industry. Instead, let this be one area where we accept some reasonable limits with minimal appreciable trade-offs in order to achieve goals related to overall energy consumption and emissions. If players are unlikely to notice a substantial improvement anyway, why not?