What’s going on with Nvidia GPUs and PC gaming power consumption

Welcome back, I hope you had a great break, ate some yummy food and enjoyed some time off. Unfortunately, the climate never takes a break, and as I write the multiple fires still threatening large parts of Los Angeles are at the forefront of my mind. Sending all my best wishes to any readers, their friends and family in or even near the affected areas, and as much relief and support as possible in the circumstances.

Before the fires took over the entire news cycle, news broke of the next generation of Nvidia GPUs launching in a couple of months, and the frankly brain-breaking potential power consumption (via TDP or thermal design power ratings – actually a measurement of the heat produced, but a decent proxy with some provisos). After some early leaks, Nvidia published the full specs on their website here. The numbers for the 5000 series GPUs are a step change beyond the already power-hungry 4000 series – which should concern anyone who cares about energy efficiency and reducing the power consumption of gaming.

It also prompted me to try and do some sums for PC gaming energy consumption (or at least, the GPU part of it) by drawing on the Steam hardware survey. It collects a simply huge amount of data on what sorts of GPUs are actually out there in people’s PCs, which is extremely helpful at getting a picture of what PC gaming energy consumption might look like these days.

To start, I pulled data from Steam’s hardware survey, specifically the charts for video cards, from this page here. It provides a list of each GPU as a percentage of the total Steam hardware survey (I’m assuming that the survey is representative of the entire Steam user base) which I copied into a spreadsheet. Oh yes! That’s right, it’s time for yet another glorious spreadsheet – you can take a look now and follow along, or check it out after (I've also linked it at the end).

From there, I added a column of TDP values for each GPU from Nvidia’s 'compare' page linked above, which helpfully provides values for each card across the last four generations. For the remaining GPUs in the Steam hardware survey, I drew on Wikipedia for their TDP ratings (particularly older cards, laptop GPUs and specific Ti/Super versions). Then, I cut the full long list down to just the top 34 GPUs, cutting off any below 0.8% of the total survey sample. I also kicked out a few Intel and AMD cards (many of which didn’t have specific model numbers anyway) which leaves us with 78% of the total GPUs in the Steam hardware survey represented by Team Green, with associated TDP values for each.

Now we can apply some estimates for how much power each card would use in a year – I decided to assume that each card gets used for 1 hour per day, 7 days a week, for 365 days a year, which might be too high in reality but seems plausible (and you can easily tinker with these assumptions if you like - make a copy of the sheet and tweak column D). I also added a “capacity factor” selector – which applies a % scaler to reflect the fact that a card isn’t going to be consuming its maximum power all the time when in use. In the spreadsheet, you can change this selector ranging from 50% up to 100% and see the effect populate through the rest of the table.

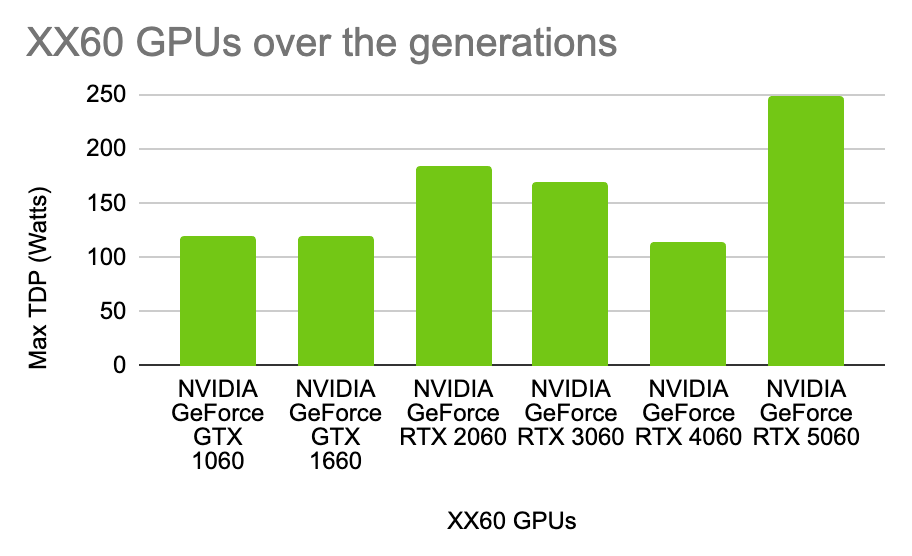

Before we get to consumption numbers though, let’s compare the TDPs of different generations of Nvidia cards and see if there are trends. Here’s a chart of every bottom-tier GTX/RTX GPU for the past few gens (the ones that are still appearing in the Steam hardware survey anyway) – all the GPUs that end in xx60:

We had something good going there for a while, Nvidia, at the entry-level! There’s my cute little 4060 in there (which I love), reverting to the mean, but no, you had to make the 5060 a monster with a TDP similar to an entire PS5. Brutal, worrying stuff.

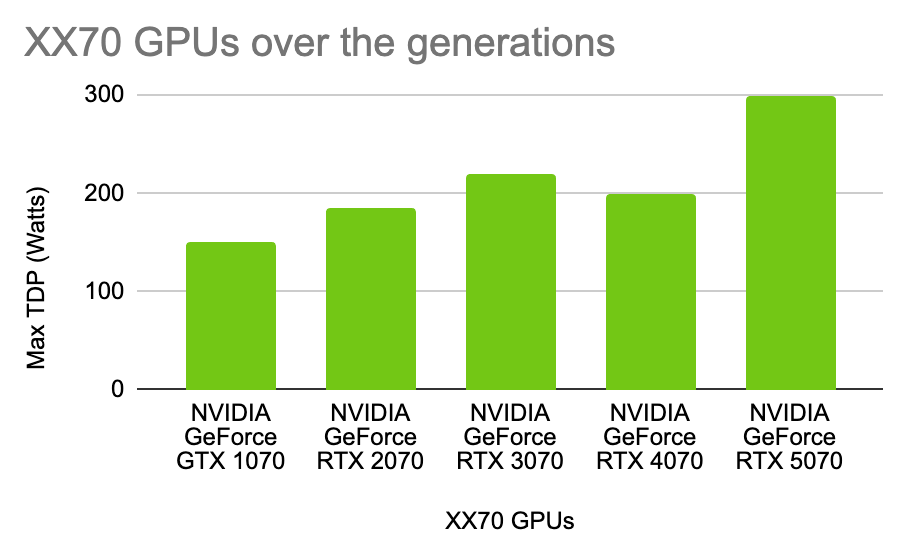

Next the xx70s – the mid-tier, usually good price-to-performance cards, the dependable workhorses of the graphics world.

A trend is somewhat observable here - with the 4070 dipping back from the excess of the 3070. But gone is the moderation of the past, now we’re in straight bonkers territory. The 5070 alone is more power-hungry than a PS5. Add in a powerful CPU, RAM, SSD and other system components and Nvidia’s total system PSU recommended for the 5070 is 650 watts. Yeeeeowzza.

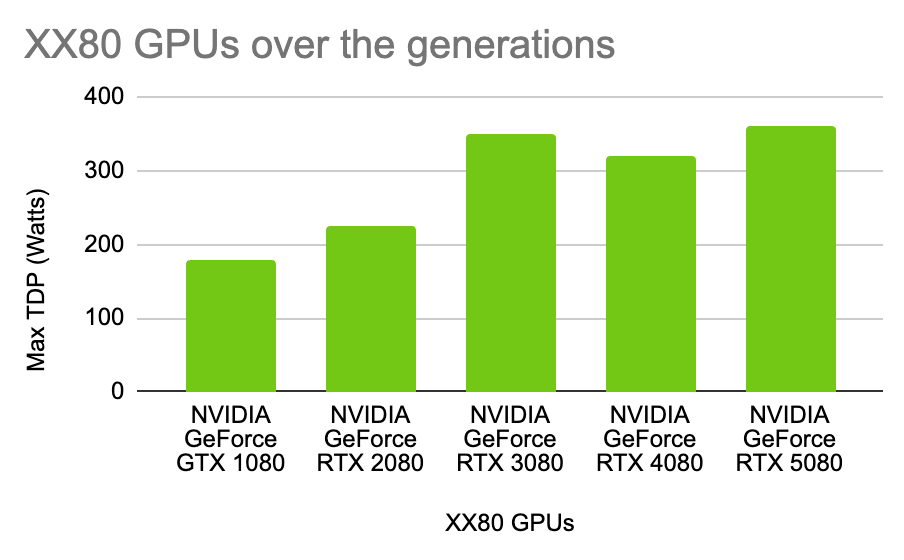

Next, the xx80s – the “PC master race” tier of graphics cards. Expensive, but not quite obscene.

Perhaps what we’re starting to see here from the 4080 onwards is more the result of Nvidia’s marketing shenanigans. I could be misremembering, but I think when it came out reviewers of the 4080 were a bit underwhelmed (or was that the 3080? I am a bit out of touch). Looking at the power numbers I am underwhelmed. At least the 5080 is only keeping pace with the 3080? If you’re looking for a silver lining, maybe that’s it… Nvidia recommends total system power for the 5080 of 850 watts. That’s a beefy, expensive machine.

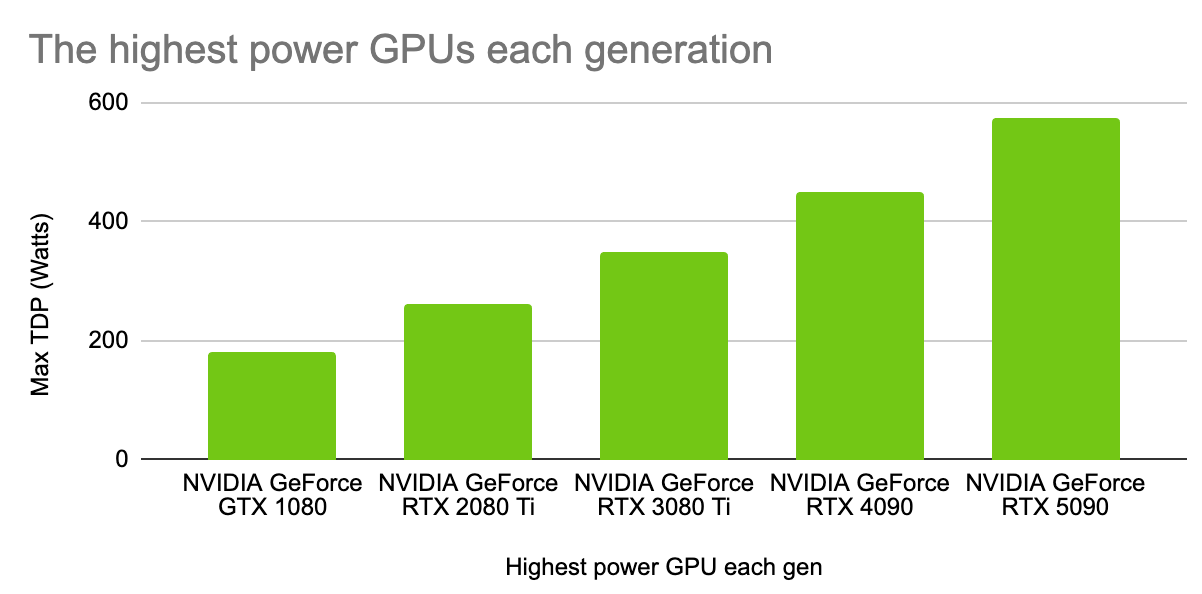

Lastly, let’s look at the most power-hungry card of each generation as this is perhaps the most telling of all, as it avoids some of the marketing guff and other Ti/SUPER/etc shenanigans. This is the maximum power that Nvidia can cram into a card over the years.

This puts things into perspective, I think. Gone are the weird up-and-down chops and changes across generations. Instead, it’s a steady drumbeat of upward-trending power consumption, the steady march of “progress” over the years. I probably don’t even need to say this, but the trend is not a sustainable one – not from any angle. Total recommended system power for a 5090 machine? 1000 watts. You can get smaller, more efficient heat pumps than that. Five hundred watts of heat is an enormous amount to dissipate into a room as well – I wouldn't want to run that in summer. Staggering stuff.

A few more observations from the Steam hardware dataset. The lowest, most frugal card in the list, which practically sips electricity like a straight chiller, is the GTX 1050 (1.14% of Steam users), which would only use a mere 134 kWh of electricity over a year (@1hr a day for a year, 70% capacity factor). That’s still $40 of electricity over a year of gaming in Australia, mind. The highest power consuming card appearing in the Steam hardware survey comes as absolutely no surprise however. It’s the NVIDIA GeForce RTX 4090 (1.18% of Steam users! Insane!), consuming a whopping 804.8 kWh (@1hr a day for a year, 70% capacity factor). That’s about $241 worth of electricity in Aussie Dollarydoos. I said it before, and I'll say it again: Yowza.
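
For anyone who wants to check those two figures without opening the spreadsheet, here is a minimal Python sketch of the per-card sum. A few things in it are assumptions rather than anything lifted from the sheet: the function name `annual_energy_kwh` is made up, the TDPs are Nvidia's listed 75 W (GTX 1050) and 450 W (RTX 4090), and the AUD 0.30 per kWh price is simply what $40 for 134 kWh implies. One thing worth flagging: the quoted kWh figures correspond to 2,555 hours of use a year (1 × 7 × 365), so that is the value used below. A strict one hour a day would be 365 hours and would give totals seven times smaller.

```python
def annual_energy_kwh(tdp_watts, hours_per_year, capacity_factor):
    """Annual energy in kWh for a card drawing a fixed fraction of its TDP."""
    return tdp_watts * capacity_factor * hours_per_year / 1000


HOURS_PER_YEAR = 1 * 7 * 365   # 2,555 h: the value that reproduces the quoted figures
CAPACITY_FACTOR = 0.70         # the 70% selector setting used in the text
PRICE_AUD_PER_KWH = 0.30       # assumption, implied by $40 for 134 kWh

# TDP in watts, as listed by Nvidia (assumed to match the spreadsheet)
cards = {"GTX 1050": 75, "RTX 4090": 450}

for name, tdp in cards.items():
    kwh = annual_energy_kwh(tdp, HOURS_PER_YEAR, CAPACITY_FACTOR)
    cost = kwh * PRICE_AUD_PER_KWH
    print(f"{name}: {kwh:.1f} kWh, about ${cost:.0f} AUD")

# GTX 1050: 134.1 kWh, about $40 AUD
# RTX 4090: 804.8 kWh, about $241 AUD
```

Changing `HOURS_PER_YEAR` or `CAPACITY_FACTOR` here plays the same role as tweaking column D or the capacity factor selector in the sheet.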

To approach something like a model of all these most popular Nvidia GPUs and energy consumption in total, we need to make some assumptions. Ideally, we would have an “average play-time per annum” figure for active Steam users. In lieu of that, we can use some simplified guesstimates from public data and apply the same assumptions as above – that each GPU plays a game for 1 hour a day, every day of the year. The same caveat still applies: this might be an overestimate – I don’t know. But it’s an assumption that you can play around with and change yourself if you make a copy of the spreadsheet. Essentially, once we multiply the specific GPU TDP with our assumed hours of operation per year, we get a per-GPU annual energy consumption figure that we can combine with the percentage that GPU appears in the hardware survey and the total number of Steam users.

But unfortunately, we still need to know what the total number of Steam players actually is – the hardware survey doesn’t say, and I assume there are some commercially sensitive dimensions to this figure, so we rely on some guesstimates again.

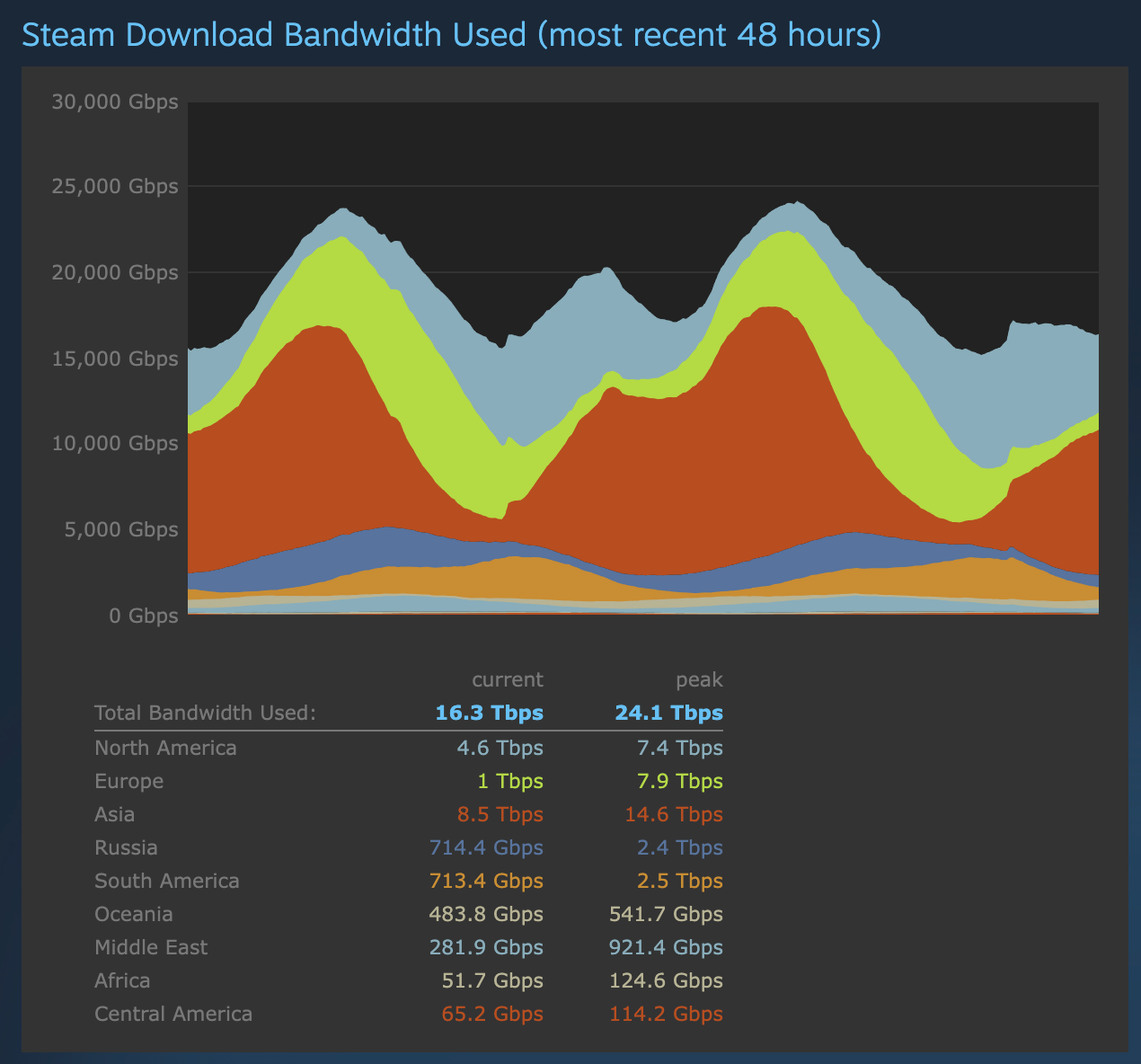

The first datapoint is a “peak players online” figure, taken from the Steam stats page: 35 million users online at peak. This might be an overestimate for our purposes, because lots of people will have Steam open on their computer without playing a game, but by the same token, not all players in a given 24 hours will be online simultaneously because of their distribution across the globe. The majority of Steam users seem to be distributed across North America, Europe and Asia (if the Steam bandwidth stats page is any indication – which I think it is).

The second possible figure informing our assumptions is peak players in-game, with this figure available from VGInsights, which claims 12.5 million Steam users at peak in-game in 2024. This is probably an undercount for our purposes, for the same reasons as above – not all players will be online at the same time in a 24-hour period, so this is probably our lower bound.

The third figure comes after doing some quick Googling for active user numbers for Steam, which produced a Daily Active User (DAU) figure of 69 million users. This seems like an overcount for our purposes – not every active user is going to be playing every day (I assume logging on is what makes a user “active” not playing – but I’m not really sure here). Alongside that DAU figure was reported a Monthly Active User (MAU) figure of 132 million users which complicates things even further. Are some of those users logging on just on weekends? Are the weekends when we see the peak daily users anyway? It’s possible! The only one who might truly know is Valve (drop me an email Valve if you wanna chat and run the numbers).

In any case, those are our ranges for possible Steam user numbers (with caveats and uncertainties for each). We can now apply our per-card annual power consumption figure to the Nvidia GPU dataset and tally up the figures across the year. I’m just going to discuss the lowest and highest here, as the top and bottom bounds for this estimate.
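
The tally itself is simple enough to sketch in Python. The version below is only an illustration: it uses just the two cards whose survey shares are quoted in this post (with Nvidia's listed TDPs of 75 W and 450 W) rather than the full 34-card list, so its totals are far smaller than the spreadsheet's and should not be read as results. The names `survey`, `user_scenarios` and `fleet_energy_twh` are made up for this sketch, and 2,555 hours a year is the usage figure that reproduces the 134 kWh and 804.8 kWh per-card numbers quoted earlier.

```python
HOURS_PER_YEAR = 2555      # reproduces the per-card figures quoted in the post
CAPACITY_FACTOR = 0.70

# card -> (share of Steam hardware survey, TDP in watts)
# Only the two cards quoted in the post; the real list has 34 rows.
survey = {
    "GTX 1050": (0.0114, 75),
    "RTX 4090": (0.0118, 450),
}

# Candidate totals for the number of Steam users, from the figures above.
user_scenarios = {
    "peak in-game": 12_500_000,
    "peak online": 35_000_000,
    "daily active": 69_000_000,
    "monthly active": 132_000_000,
}


def fleet_energy_twh(survey, total_users):
    """Sum per-card annual energy across the survey, scaled to a user count."""
    total_kwh = 0.0
    for share, tdp_watts in survey.values():
        per_card_kwh = tdp_watts * CAPACITY_FACTOR * HOURS_PER_YEAR / 1000
        total_kwh += per_card_kwh * share * total_users
    return total_kwh / 1e9  # 1 TWh = 1 billion kWh


for label, users in user_scenarios.items():
    print(f"{label}: {fleet_energy_twh(survey, users):.3f} TWh (two cards only)")
```

Because the total scales linearly with the user count, the gap between the lowest and highest scenarios is just the ratio of 132 million to 12.5 million, a bit over ten times.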

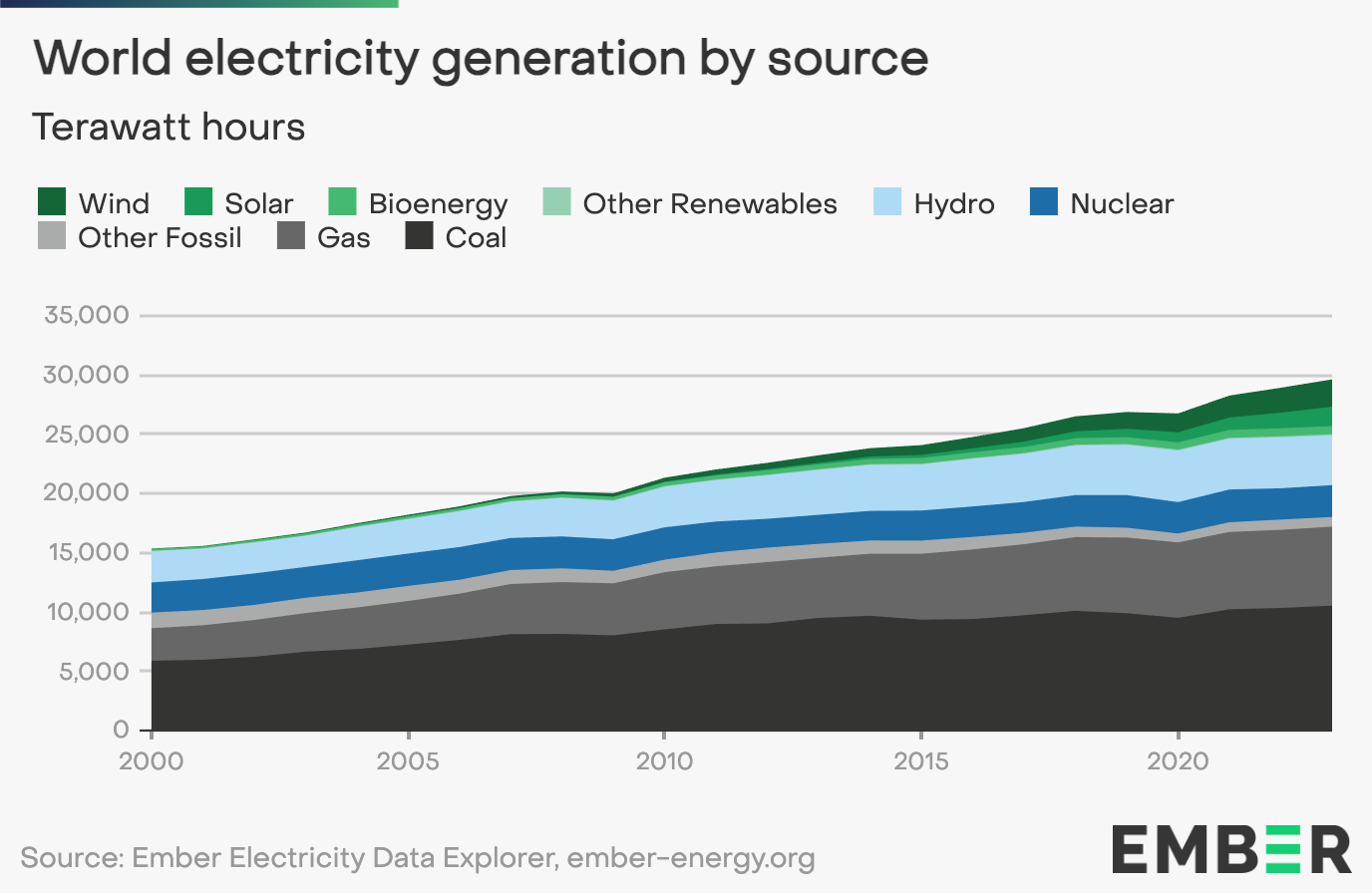

The lower figure we get is 2.9 billion kWh each year across the most popular GPUs. Another way to describe that is as 2.9 terawatt-hours (TWh), and we can put that in terms of the total global electricity generation in a year, using the Ember dataset. Here’s a chart.

In 2023, the world generated about 30,000 TWh of electricity, and if our lower number of 2.9 TWh for PC gaming GPUs is right, then that’s 0.0096% (repeating) – aaaaalmost 0.01% of global electricity used just by Nvidia GPUs. Bit of a wake up call.

What about the higher-end number? That would be using the MAU numbers, producing a figure of 31.3 TWh per annum. That’s an order of magnitude larger, and that would mean that Nvidia GPUs used around 0.1% of the entire planet’s electricity, and that's just for PC gaming on Steam. I said I'd say it again: Yowza.
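
As a quick sanity check on both percentages, here is the same division in Python. It assumes the rounded 30,000 TWh global generation figure quoted above, and the function name `share_of_global_percent` is just a label for this sketch.

```python
GLOBAL_GENERATION_TWH = 30_000  # approximate 2023 figure quoted above


def share_of_global_percent(twh):
    """Express an annual energy figure as a percentage of global generation."""
    return twh / GLOBAL_GENERATION_TWH * 100


estimates_twh = {"lower bound": 2.9, "upper bound (MAU)": 31.3}

for label, twh in estimates_twh.items():
    print(f"{label}: {twh} TWh is {share_of_global_percent(twh):.4f}% of global generation")

# lower bound: 2.9 TWh is 0.0097% of global generation
# upper bound (MAU): 31.3 TWh is 0.1043% of global generation
```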

Whatever the final number of players is, we now have a bit of a sense of the structure of power demand that exists and a sense of where it's heading. The fact that the 4090 is 1.18% of all Steam users seems terrifying to me – and the prospect of that many 5090s eventually being out there in the world gives me funny feelings in the pit of my stomach.

This exercise illustrates the scale of the decarbonisation challenge ahead of us – and shows how much harder it's being made by the most extreme pieces of tech. "But they're more efficient, you get more performance out of them" – true enough, but at what cost? The truly worrying aspect is the challenge that net zero represents to companies like Nvidia, and to the many others across the games industry who rely on end users buying these graphics cards, and who fuel the graphics upgrade arms race. We need a new, sustainable business model.

The really scary thing for me is that, besides Nvidia itself, there’s really no one responsible for this challenge yet. The Nvidia sustainability corporate page is a bit threadbare tbh! And Valve doesn’t seem to be across this yet either – but they’re going to have to be soon. Mandatory climate reporting is coming to the US under California laws SB253 and SB261. This is happening! LA is on fire, and the demand for change is only getting louder – but we're still not moving in the right direction.

I haven’t even touched on what sort of CO2 emissions might come from this level of electricity demand yet because honestly, that’s another step more complicated – I’ve got some general numbers in the spreadsheet using a default global emissions factor but we can do better if we have a model of where most players are in the world – something for a future post perhaps.

I wish I had better news about this – I desperately long for the day when I can stop the whole Jeremiah act and be quietly confident that “we got this!” but all signs are pointing to another year of increasing emissions, increasing energy demand, increasing fossil fuel use. And in the meantime the world bakes, goes up in flames, gets drowned in floodwaters. Things have never been more urgent, and everything we do today to prevent emissions really does make a world of difference tomorrow.

Time to get cracking. We’re launching into it this year at the Sustainable Games Alliance and we have big plans for developing our GHG standard, great ideas for helping European companies with CSRD reporting, and I'm extremely keen to finally get down to work on finding real, actionable ways to reduce gaming’s footprint – if you’ve been considering joining, there's never been a better time. Get in touch.

Here’s the spreadsheet again if you want to check it out yourself.

And some of the sources I used for the spreadsheet: Nvidia’s compare page, and Wikipedia entries for past GeForce cards on the 10 series, 20 series, 30 series and 40 series. I also got some of the Steam DAU/MAU numbers from here, but I saw at least one other site repeat the same figures without other explicit sourcing so YMMV!

Thanks for reading GTG – back soon.